The next wave of AI is more than just models

Byline: Augusto Marietti (CEO & Co-Founder, Kong), YJ Lu (Director, Teachers’ Venture Growth), and Yiran Wu (Investment Analyst, Teachers’ Venture Growth)

At a glance

Teachers’ Venture Growth and special guest author Augusto Marietti, Kong’s CEO and Co-founder, dive into how the Model Context Protocol (MCP) is emerging as an infrastructure shift for scalable and interoperable intelligence.

AI is moving from standalone models to contextual, agentic systems that can autonomously set goals and execute tasks by connecting to real-world data, tools and actions through the Model Context Protocol (MCP).

As a neutrally governed industry standard, MCP is becoming critical infrastructure for agentic workflows, enabling an ecosystem shift similar to APIs.

Context is the new compute

Over the past few years, AI has advanced at an unprecedented pace. We’ve seen the leap from traditional machine learning systems to generative AI models that can write, code and reason with human-like fluency. This shift has fully transformed how we engage with AI, but the journey doesn’t end there.

We’re now entering a new stage of contextual, agentic AI, which can autonomously set goals and execute tasks with minimal human intervention. At the foundation of this transformation lies the Model Context Protocol (MCP), the emerging standard helping to connect prompt-based GenAI models to real-world data, tools and actions.

Previous episode: Context not found (404)

Until recently, most frontier large language models (LLMs) operated in a “walled garden.” They could receive and interpret user prompts to generate text, but had no direct, standardized way of accessing personal or company-specific data, internal tools, application programming interfaces (APIs) or any other sources that would provide them with the context or background needed to deliver better, well-informed responses.

To make context-driven responses possible, enterprises previously had to build custom integrations (one-off “glue code”) that were often brittle, expensive and painful to maintain. Then, in November 2024, Anthropic introduced MCP as an open-source framework that aimed to help frontier models generate more relevant responses by bringing context into LLMs through a common protocol. It standardizes how AI models discover, call and authenticate with APIs from external systems, replacing fragile integrations with a common framework.

The protocol quickly became an industry standard adopted by many leading players such as OpenAI and Google as part of their AI stacks. As the ecosystem matures, AI systems will be able to maintain context as they move between different tools via a sustainable architecture.

Okay… But How Does This Work?

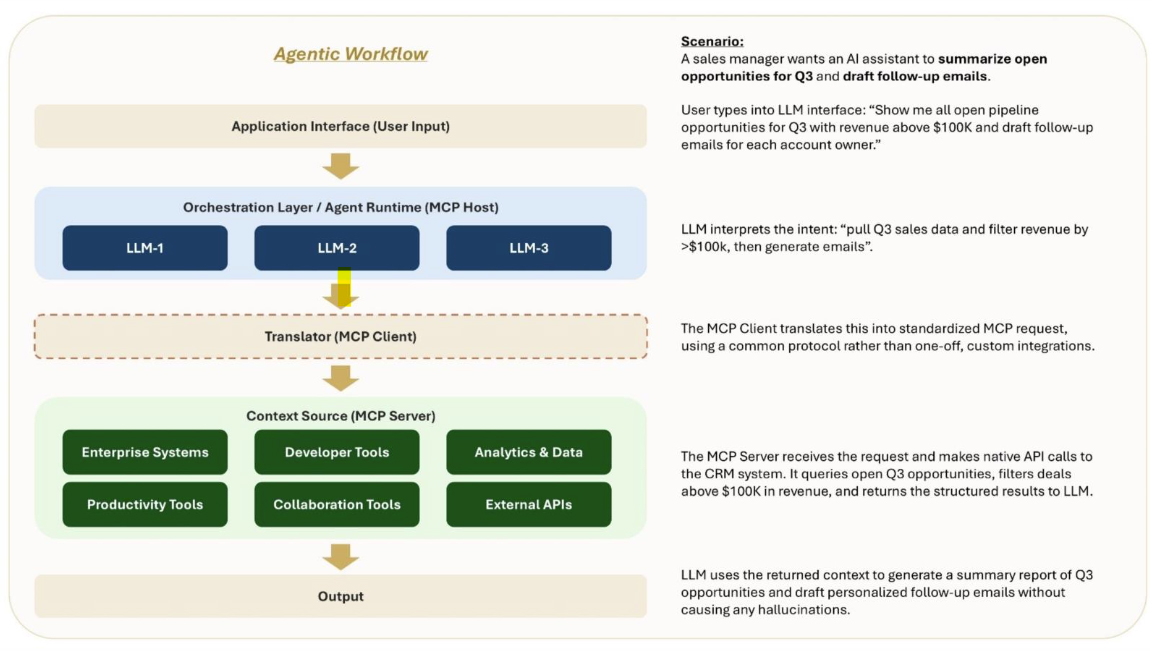

Before MCP, every LLM had its own plug-in format and required custom glue code to talk to every tool. This created a scaling nightmare, fragmenting the ecosystem into N × M integrations (one for every pairing of N models and M tools). MCP collapses that matrix into a vendor-neutral system by defining clear roles (host, client and server) so that tools can be discovered and called in a consistent, reusable way. Build one MCP server per context source, and any MCP-compliant host, such as an AI assistant or chatbot, can use it like a tool from a toolbox.
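To make the scaling argument concrete, here is a small back-of-the-envelope sketch. The function names and the counts of 5 models and 20 tools are hypothetical, chosen only for illustration; the point is the shift from multiplication to addition.

```python
def point_to_point_integrations(num_models: int, num_tools: int) -> int:
    """Custom connectors needed when every model is wired to every tool directly."""
    return num_models * num_tools


def shared_protocol_integrations(num_models: int, num_tools: int) -> int:
    """Components needed with a shared protocol: one client per host, one server per tool."""
    return num_models + num_tools


# Hypothetical sizes, for illustration only.
models, tools = 5, 20
print(point_to_point_integrations(models, tools))   # 100
print(shared_protocol_integrations(models, tools))  # 25
```

Adding a twenty-first tool under the point-to-point approach would mean five more connectors; under a shared protocol it would mean one more server.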

The diagram shows this in action. A user enters a prompt, the model interprets their intent and, crucially, instead of taking a guess, it reaches out to real context for further clarification. The MCP client acts as the translator, converting the user’s intent into standardized MCP requests. MCP servers then handle the actual execution: exposing secured, auditable access to context sources such as CRMs, analytics tools, dev platforms and productivity apps, and making the underlying API calls on the model’s behalf. These systems return structured results, which the model uses to generate useful, context-aware outputs rather than a plausible-sounding paragraph. Most importantly, you don’t need to write N × M pieces of code to do this.
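The request flow described here can be sketched in a few lines, assuming MCP's JSON-RPC 2.0 message shape and its `tools/list` and `tools/call` methods. Everything else is a hypothetical stand-in: the `lookup_account` tool, the made-up CRM record, and the in-process `handle_request` function that plays the server. A real deployment would use an MCP SDK and a proper transport rather than direct function calls, and would start with an initialization handshake that is omitted here.

```python
import json
from typing import Any, Dict, Optional


def lookup_account(account_id: str) -> str:
    """Hypothetical context source: a made-up, in-memory CRM."""
    fake_crm = {"acme-001": "Acme Corp, renewal due in Q3"}
    return fake_crm.get(account_id, "account not found")


# Hypothetical tool registry exposed by the toy server.
TOOLS: Dict[str, Dict[str, Any]] = {
    "lookup_account": {
        "description": "Return a short summary for a CRM account.",
        "inputSchema": {
            "type": "object",
            "properties": {"account_id": {"type": "string"}},
            "required": ["account_id"],
        },
        "handler": lookup_account,
    }
}


def make_request(request_id: int, method: str,
                 params: Optional[Dict[str, Any]] = None) -> str:
    """Client side: wrap an intent in a JSON-RPC 2.0 request."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def handle_request(raw: str) -> str:
    """Toy server side: answer tool discovery and tool invocation requests."""
    request = json.loads(raw)
    reply: Dict[str, Any] = {"jsonrpc": "2.0", "id": request["id"]}
    method = request["method"]
    if method == "tools/list":
        reply["result"] = {"tools": [
            {"name": name,
             "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            reply["error"] = {"code": -32602, "message": "unknown tool"}
        else:
            text = tool["handler"](**params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": text}],
                               "isError": False}
    else:
        reply["error"] = {"code": -32601, "message": "method not found"}
    return json.dumps(reply)


# Step 1: discover which tools exist. Step 2: call one with structured arguments.
listing = json.loads(handle_request(make_request(1, "tools/list")))
call = json.loads(handle_request(make_request(
    2, "tools/call",
    {"name": "lookup_account", "arguments": {"account_id": "acme-001"}})))
print([tool["name"] for tool in listing["result"]["tools"]])
print(call["result"]["content"][0]["text"])
```

Because the host only ever sees the tool's name, description and input schema, the same client code could talk to any server that follows the protocol, whatever sits behind it.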

In the Footsteps of APIs, But with Bigger Shoes

This isn’t the first time technology has needed a common standard to unlock scale. Previously, software needed a common language to connect systems. APIs were the contracts that allowed one piece of software to talk to another – defining what can be asked, what gets returned and how to securely exchange that information.

In the early days, APIs were mostly internal tools used by engineers to connect different systems within a company. By the early 2000s, companies (Amazon, eBay, Salesforce, etc.) began opening APIs to business partners and third-party developers. Over time, APIs became the foundation of how modern apps were built – powering everything from payment systems to cloud infrastructure to social media logins to your favorite weather app.

But APIs didn’t really take off until they became simple and standardized (with REST, JSON, OAuth and Open). Developers then finally had a clean, portable and predictable way to build a piece of software on top of other services. This unlocked entire businesses like Stripe, Twilio and Plaid, which monetize through and practically exist as APIs. MCP is poised to follow a similar path for AI by standardizing how models access tools and data. As MCP adoption grows, we’ll likely see the same supporting pieces that APIs once needed: registries, observability, approval systems, policy engines and better tooling.

Our Bets: Context + APIs + Workflows = Agentic AI

We’re making two important bets on the future of AI infrastructure. First, Anthropic’s Model Context Protocol is emerging as the industry-standard protocol for connecting LLMs to the right tools and data and for letting agents communicate in plain English across various APIs, laying the groundwork for agentic workflows and, ultimately, agent-to-agent (A2A) systems. Second, Kong (see below) is extending its leadership in API management into AI connectivity, serving as the connective tissue between enterprise systems and the new generation of AI agents.

Anthropic

Anthropic introduced MCP as an open-source framework, recognizing that the future of AI depends not only on building ever-larger models, but also on connecting them to the right context. In May 2025, Anthropic announced Integrations – extending MCP support beyond Claude Desktop’s local servers and embedding it directly into Claude’s API. This upgrade allows Claude to connect seamlessly to any remote MCP server without code, enabling it to discover tools, execute calls, manage authentication and return integrated results. Looking ahead, Anthropic’s road map evolves from building stand-alone agents that act to enabling orchestration across multi-agent systems (A2A). In practice, this could be one agent retrieving client data, another running portfolio analysis and a third generating a compliance-ready report, all coordinated seamlessly through MCP and A2A. This evolution transforms AI from a single assistant into a network of specialized agents, unlocking higher-value, end-to-end business use cases.

Kong

Kong is extending its API management platform, Kong Konnect, into a connectivity layer for AI, starting with two key capabilities: AI Gateway and MCP servers. The AI Gateway allows enterprises to route, secure, monitor and optimize calls to LLMs, MCP and API providers by treating AI inference endpoints like any other API, bringing speed, visibility and security controls to what is currently a black box. Meanwhile, the MCP server for Konnect exposes an organization’s system of record (APIs, services, traffic analytics, etc.) through MCP, allowing AI agents like Claude or Cursor to query Kong Konnect in natural language and retrieve actionable insights. Anchored in its vision of “No AI without APIs,” Kong’s platform is not only critical for today’s digital infrastructure, but increasingly indispensable in connecting the new agentic world.
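As a rough illustration of what treating inference endpoints "like any other API" can mean, the sketch below puts authentication, rate limiting, routing and basic metrics in front of model calls. It is a generic toy and not Kong's implementation or API: the `ToyAIGateway` class, the key and model names and the status codes chosen are all assumptions made for this example.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class ToyAIGateway:
    """Illustrative only: a generic gateway pattern, not Kong's product or API."""

    def __init__(self, routes: Dict[str, Callable[[str], str]],
                 api_keys: Dict[str, str], max_calls: int,
                 window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.routes = routes            # model name -> upstream callable
        self.api_keys = api_keys        # API key -> consumer name
        self.max_calls = max_calls      # allowed calls per consumer per window
        self.window_seconds = window_seconds
        self.clock = clock
        self.calls: Dict[str, Deque[float]] = defaultdict(deque)
        self.metrics: Dict[str, int] = defaultdict(int)

    def handle(self, api_key: str, model: str, prompt: str) -> Dict[str, object]:
        # Secure: reject callers without a known key.
        consumer = self.api_keys.get(api_key)
        if consumer is None:
            self.metrics["rejected_auth"] += 1
            return {"status": 401, "body": "unknown API key"}

        # Rate limit: sliding window over this consumer's recent forwarded calls.
        now = self.clock()
        recent = self.calls[consumer]
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_calls:
            self.metrics["rejected_rate_limit"] += 1
            return {"status": 429, "body": "rate limit exceeded"}

        # Route: pick the upstream for the requested model.
        upstream = self.routes.get(model)
        if upstream is None:
            self.metrics["rejected_route"] += 1
            return {"status": 404, "body": "no route for model"}

        # Monitor: count what was forwarded, then make the call.
        recent.append(now)
        self.metrics["forwarded:" + model] += 1
        return {"status": 200, "body": upstream(prompt)}


# Hypothetical upstream that echoes the prompt instead of calling a real model.
gateway = ToyAIGateway(
    routes={"model-a": lambda prompt: "echo:" + prompt},
    api_keys={"key-1": "team-1"},
    max_calls=2,
    window_seconds=60.0,
)
print(gateway.handle("key-1", "model-a", "hello"))
```

Keeping these checks in one place, rather than in every application that calls a model, is what makes usage visible and policy enforceable across teams.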

MCP’s next chapter: Linux Foundation stewardship

In December 2025, Anthropic donated the Model Context Protocol to the newly established Agentic AI Foundation (AAIF), a consortium under the Linux Foundation. AAIF was co-founded by Anthropic, Block and OpenAI, with support from Google, Microsoft, AWS, Cloudflare and Bloomberg. The foundation launched with three founding contributions: Anthropic’s MCP, Block’s goose framework and OpenAI’s AGENTS.md. Anthropic’s MCP donation ensures the emerging industry standard is managed in a neutral, community-governed environment rather than under a single company. This approach mirrors the paths of major open-source projects such as Linux, Kubernetes, Node.js and PyTorch, whose adoption accelerated after shifting to independent stewardship within the Linux Foundation.

The transition also reflects how quickly MCP has become integrated in the AI ecosystem, with more than 97 million monthly SDK downloads, more than 10,000 active servers and deep integrations across Claude, ChatGPT, Gemini and many other AI products. Under the new stewardship, MCP will serve as a durable, vendor-neutral standard managed by an independent body, reducing lock-in risk while opening the protocol to contributions from the broader ecosystem. Together with goose and AGENTS.md under AAIF, MCP helps define the coordination layer for agentic AI, moving from a practical developer framework to critical digital infrastructure for the next generation of agentic workflows.

An opportunity ahead

By giving models a common, neutrally governed language to access tools, data and systems, MCP reduces friction and unlocks interoperability, making it easier to build agentic workflows that are reliable and scalable. As adoption grows, we’ll see the same ecosystem effects that APIs unleashed two decades ago: new business models, new tooling and entirely new categories of applications built on top of the protocol. The difference this time? The stakes are higher, the pace is faster and the potential is bigger. MCP doesn’t just connect software to software – it establishes a foundation that connects AI to everything.

Of course, with that opportunity comes risk. As an immediate example, MCP introduces new security considerations, from tool misuse to data exposure, that require robust controls and governance. Building the ecosystem responsibly means balancing openness with safeguards, just as APIs evolved to include authentication standards, rate limiting and monitoring.
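One simple form such a safeguard could take is a deny-by-default allowlist that records every authorization decision for later review. The sketch below is a hypothetical illustration, not part of the MCP specification or any vendor's product; the `ToolPolicy` class and the agent and tool names are invented for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ToolPolicy:
    """Hypothetical guard: which agent may call which tool, with an audit trail."""

    allowed_tools: Dict[str, Set[str]]  # agent name -> tools it may call
    audit_log: List[Dict[str, object]] = field(default_factory=list)

    def authorize(self, agent: str, tool: str) -> bool:
        # Deny by default: unknown agents and unlisted tools are both refused.
        allowed = tool in self.allowed_tools.get(agent, set())
        # Record every decision, allowed or not, so misuse can be investigated.
        self.audit_log.append({"agent": agent, "tool": tool, "allowed": allowed})
        return allowed


# Made-up agents and tools, for illustration only.
policy = ToolPolicy(allowed_tools={"reporting-agent": {"read_dashboard"}})
print(policy.authorize("reporting-agent", "read_dashboard"))  # True
print(policy.authorize("reporting-agent", "delete_records"))  # False
print(len(policy.audit_log))                                  # 2
```

A production control would also need to consider the arguments passed to a tool and the data it returns, which this sketch deliberately leaves out.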

The story of MCP is still being written, but the lesson from APIs is clear: a standard alone doesn’t change the world. Standards create ecosystems, and ecosystems change industries. If successful, MCP will matter less as a product innovation and more as an infrastructural shift, one that changes how intelligence is packaged, shared, and scaled across the technology landscape for decades to come.